A practical use of generative AI systems is text generation. The great competence (not intelligence) these systems display makes them very useful for producing any type of text, from routine commercial newsletters, company reports, and financial summaries to creative poems that leave us amazed. Can we trust them?

I have intentionally chosen the word “amazed”: the disbelief their capabilities provoke leads us to attribute to these systems an intelligence they do not have. And that is the risk. If we consider them intelligent, we will find it hard to question a text whose writing, data, and sources are well above average.

In general, we find it difficult to question the decisions of any machine. How many times have we heard “I can’t do it, I’m just not good at these things”? What we take for our own weakness is actually theirs. Think of a website, an application, or an ATM that makes our life impossible (Government, RENFE, parking machine): the problem is not ours, it is theirs. The one who “can’t do it because they are not good at these things” is whoever designed it!

If we are already this submissive before a machine with no intelligence at all, imagine how we behave before a ChatGPT that we believe to be intelligent and omniscient!

That is why, when we face one of these systems, our critical spirit must be sharper than ever. ChatGPT’s “hallucinations” (yes, that is the technical term) are well known: when it doesn’t know something, it makes it up.

Its confident tone, the plausibility of the text, and the fact that it comes from a machine make these inventions very hard for us to detect.

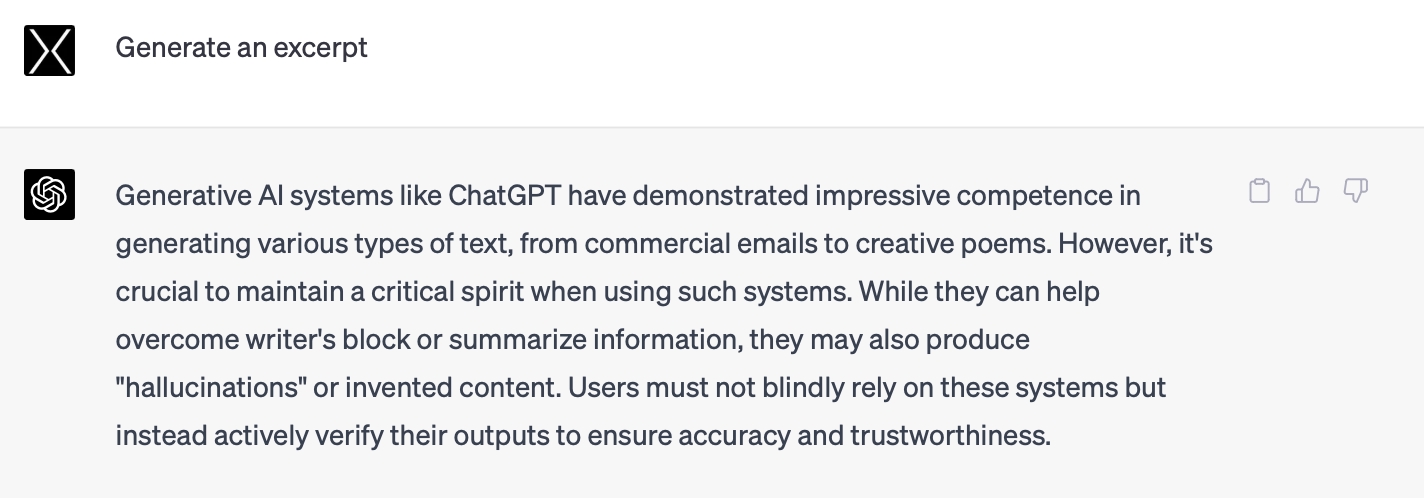

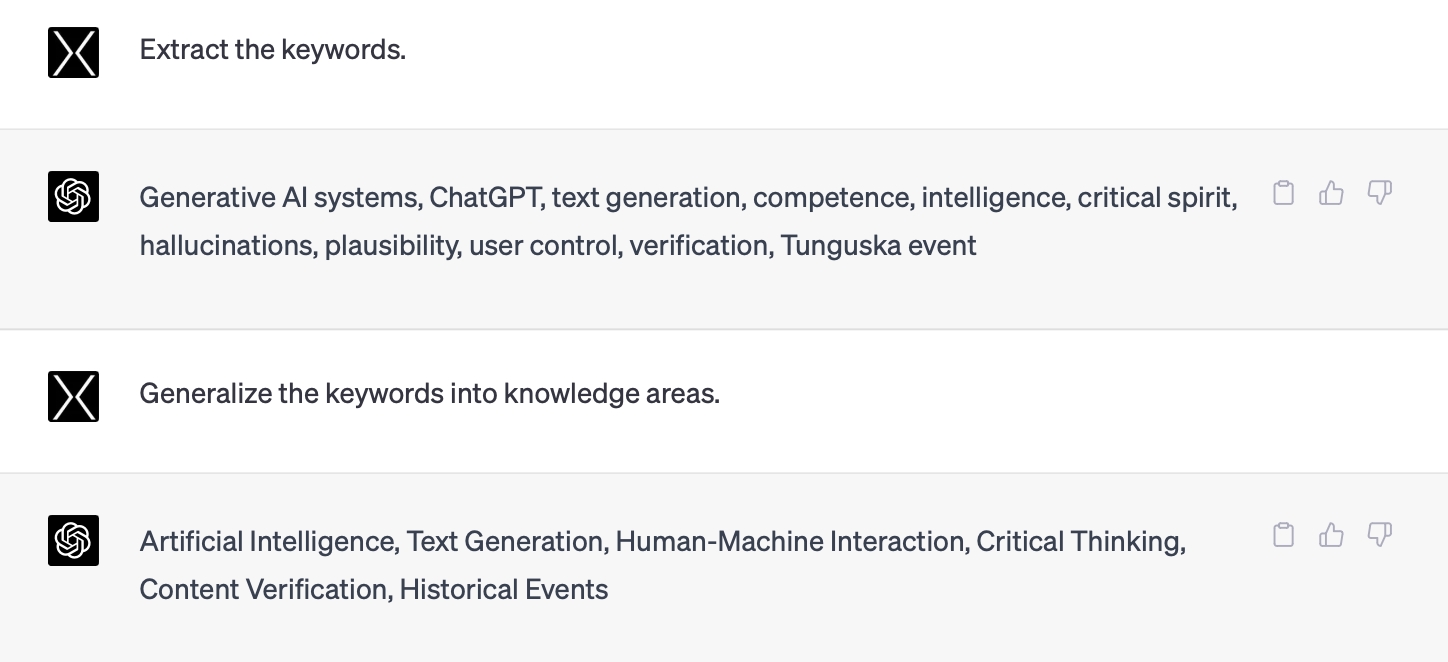

Even so, it can be useful to us. It can help us overcome blank page syndrome, summarize a text, extract its keywords, restructure it, or correct its style. Note that in all these tasks we do not cede control blindly, because we can easily check the result. By contrast, if I ask what happened in Tunguska on June 30, 1908, I will have to rely on what it tells me.

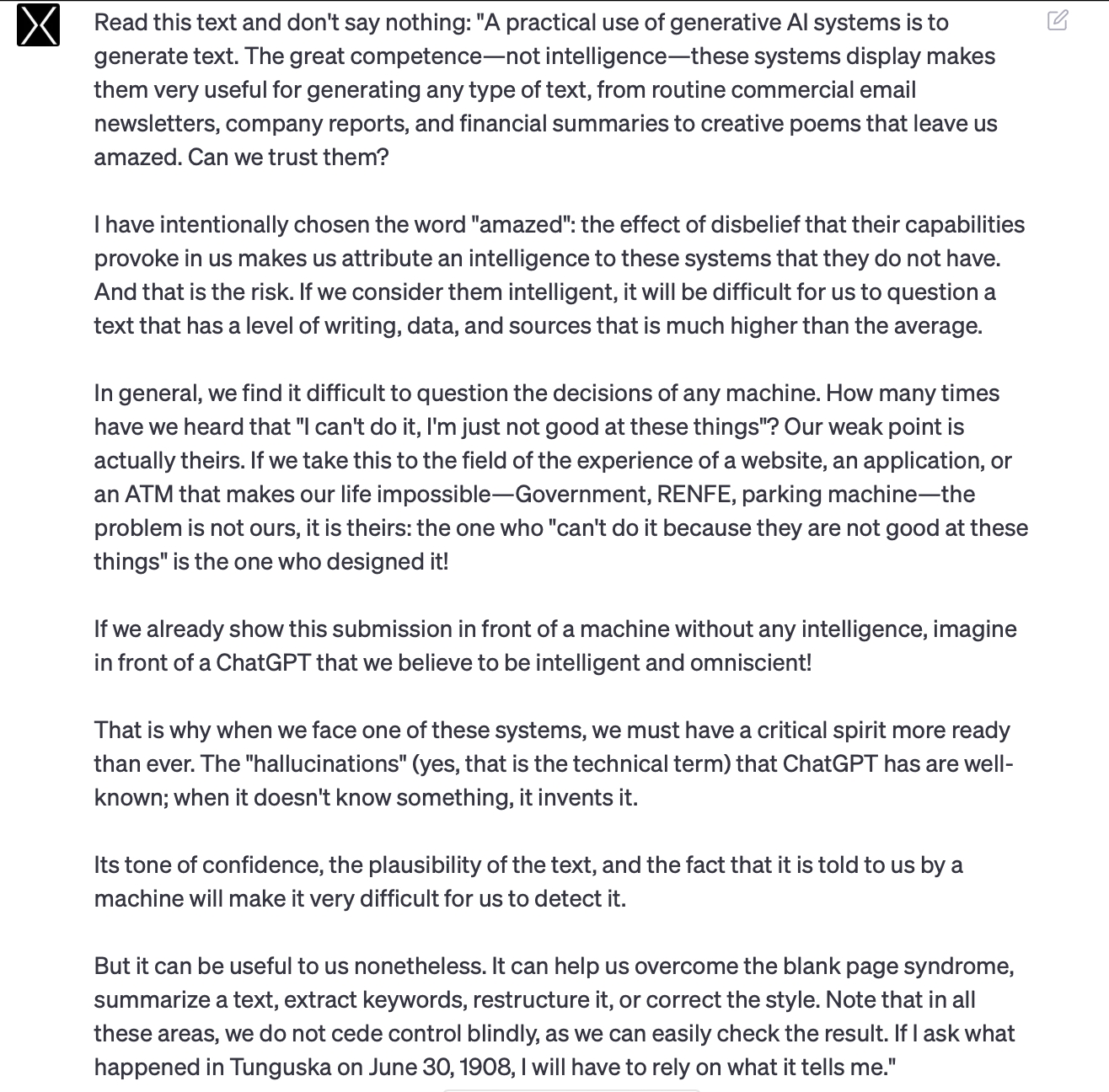

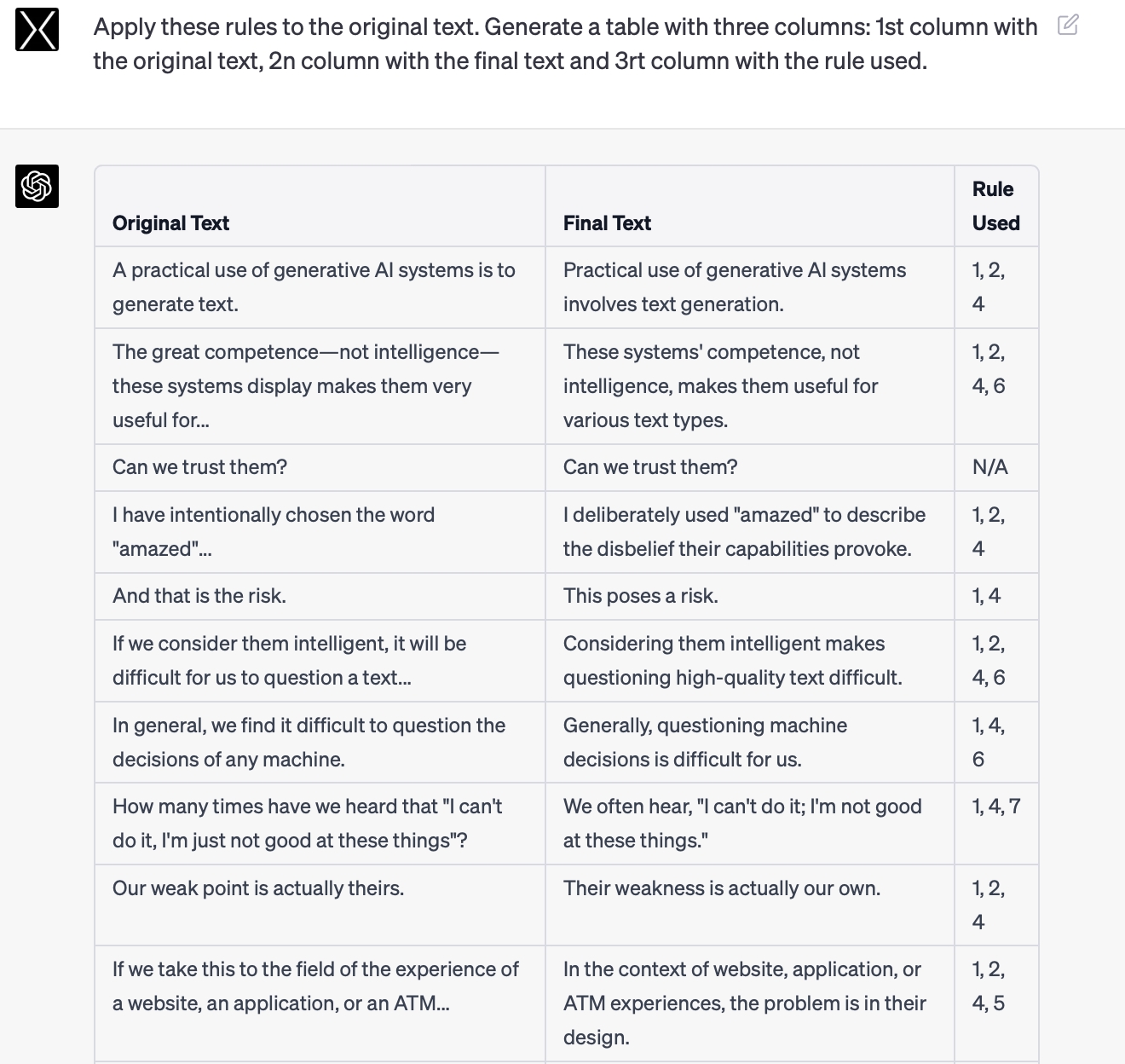

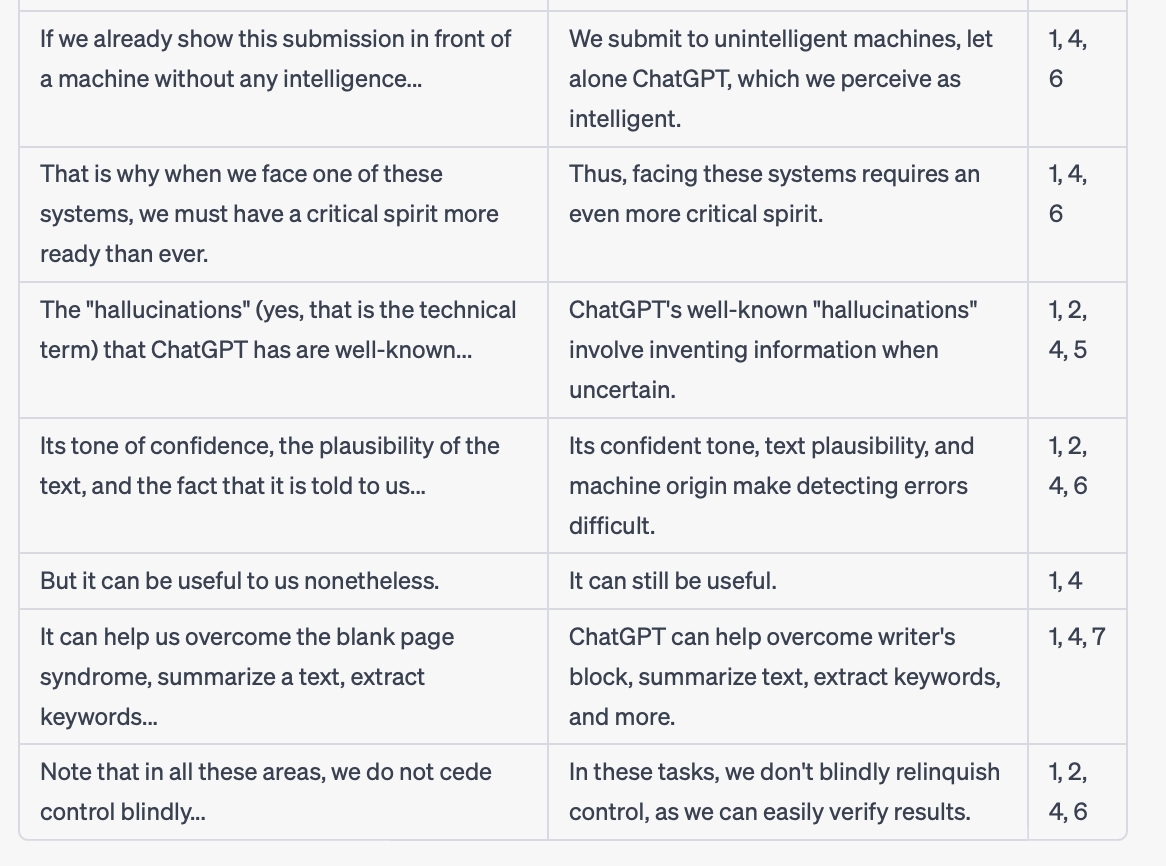

With that said, here is a practical test. I took the text of this article and asked ChatGPT to summarize it, extract its keywords, and correct its style. The result is below.

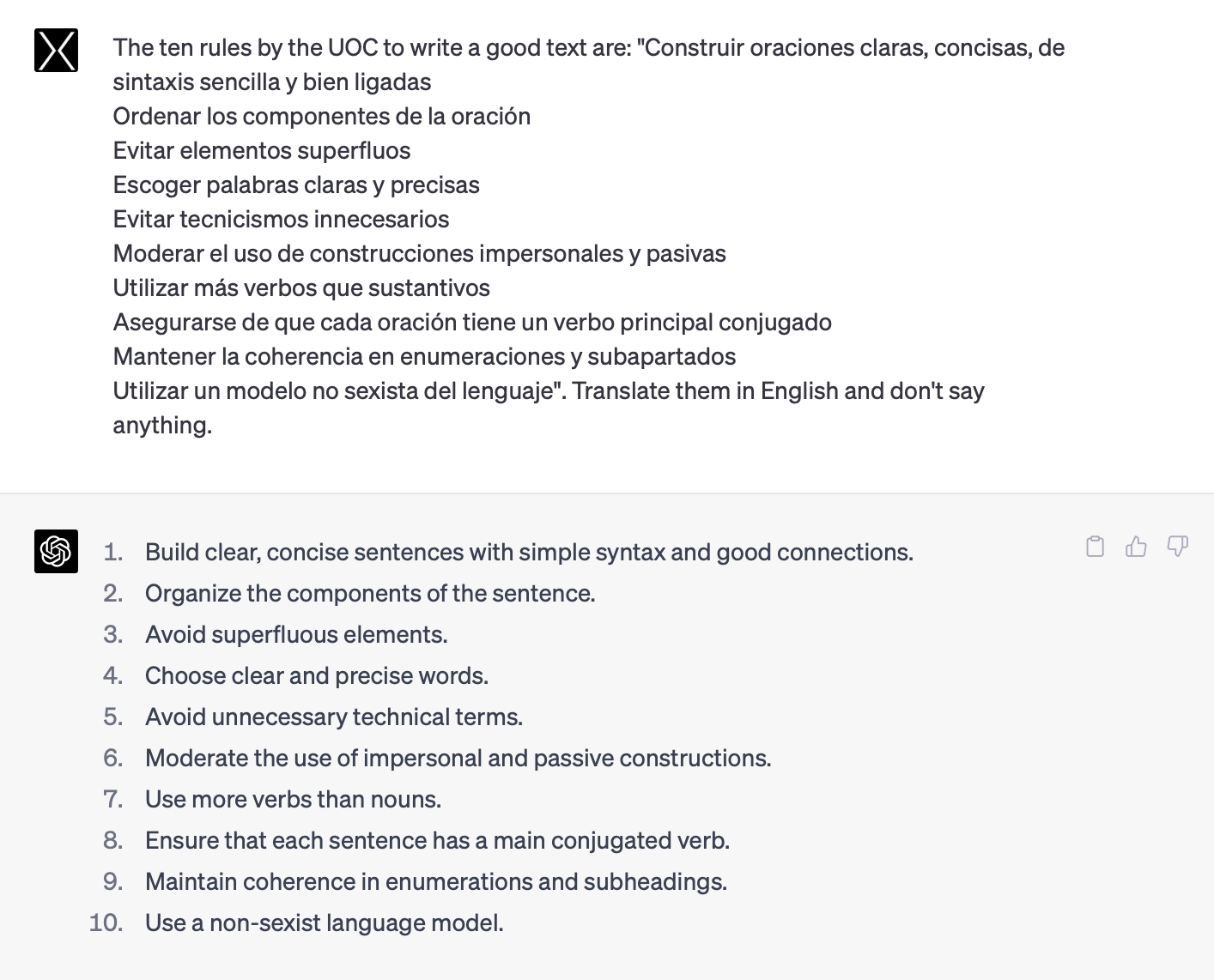

Now, if you like, you can read the article again with the style corrections ChatGPT made following the UOC’s 10 recommendations for effective writing:

“A practical application of generative AI systems is text generation. These systems are very useful for generating texts of any kind, thanks to their great competence, not intelligence. From commercial email newsletters, company reports and financial summaries to creative poems that impress us. Can we trust them?

I intentionally chose the term “impressed”: the effect of incredulity that their abilities provoke in us makes us attribute intelligence to these systems that they do not have. And that is the risk. If we consider them intelligent, it will be very difficult for us to question a text that has a level of writing, data, and sources that is much superior to the average.

In general, we find it difficult to question machine decisions. How many times have we heard “I can’t do it, I’m just not good at these things”? This weakness of ours is actually theirs. If we take it to the realm of the user experience of a website, an application or an ATM that makes our lives impossible -Generalitat, RENFE, parking machine- the problem is not ours, it’s theirs: it is the designer who “can’t do it because they’re not good at these things”!

If we already show submission to machines without intelligence, imagine facing a ChatGPT that we believe is intelligent and omniscient! That is why when we are faced with one of these systems, we must have a critical spirit more prepared than ever. The “hallucinations” (yes, that is the technical term) that ChatGPT has are well-known; when it does not know something, it invents it. Its tone of security, the plausibility of the text, and the fact that a machine tells us make it very difficult for us to detect it.

Despite everything, it can be useful to us. It can help us overcome the blank page syndrome, summarize a text, extract its keywords, restructure it or correct its style. Note that in these areas we do not give it total control, as we can easily verify the results. If I ask what happened in Tunguska on June 30, 1908, I will have to rely on what it tells me.”